Reflecting on the thought-provoking work¹ of Sharra Vostral, it’s intriguing to explore how her concept of “BIOLOGICALLY INCOMPATIBLE TECHNOLOGY” can be applied in the current digital age.

In the ’80s, an innovative product was launched amid much fanfare – a smaller, lighter, and super absorbent tampon.

Unfortunately, this new product led to an outbreak of Toxic Shock Syndrome (TSS), especially among young women. It was not that the product itself was harmful, but in a complex interplay of variables, it enabled Staphylococcus aureus to reproduce rapidly, producing a deadly toxin. This concept has since been recast as “biocatalyst technologies” in her latest book, “Toxic Shock,” which has truly captured my attention.

Ultimately, that particular tampon was removed from the market, but its users were blamed for using it “incorrectly,” a notion that persists and contributes to the stigma surrounding TSS today. The prevailing sentiment seems to be that if someone suffers from TSS, it’s her fault.

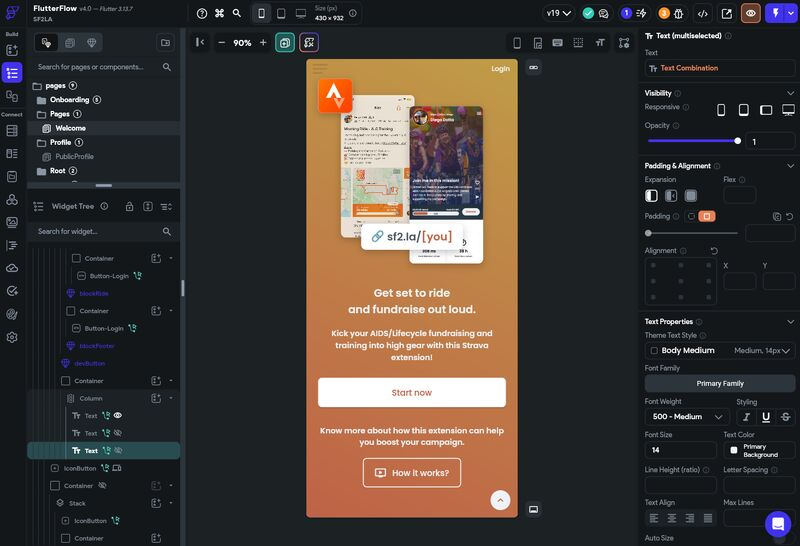

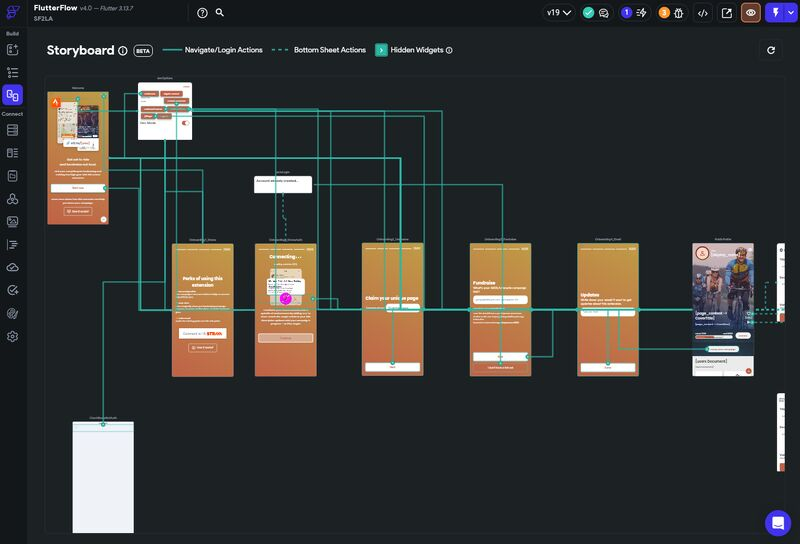

Fast forward to the present day: we are surrounded by new digital projects, many of which have unanticipated outcomes. Social media has often been implicated in a rise in depression and anxiety², and thousands of novel digital solutions are emerging. Particularly alarming is the sharp increase in suicides among young women³, which brings to mind the young women affected by TSS decades ago.

These parallels lead me to ask:

– Are we, as product innovators and leaders, unintentionally developing what could be deemed “psychologically incompatible technology”?

– Are we fostering an era of anxiety- and depression-catalyst technologies? Like a Digital Toxic Shock?

– Will we blame the users (again) if something unexpected happens?

We must remember to keep human well-being at the heart of technological advancement.

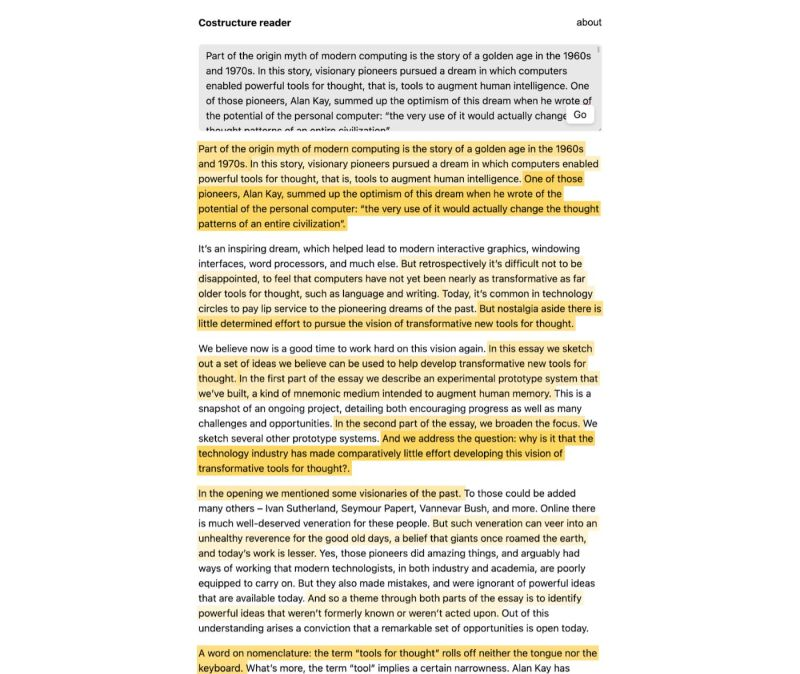

For a deeper dive, here are the articles that helped me connect these ideas:

1. Rely and Toxic Shock Syndrome: A Technological Health Crisis

2. Jonathan Haidt and Zach Rausch’s research on the teen mental health crisis

3. Almost a Third of High-School Girls Considered Suicide in 2021